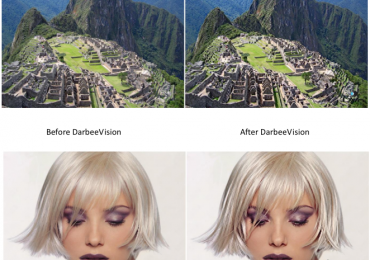

DarbeeVision Darbee Visual Presence Video Processing

Every now and then a technology comes along that changes the way we look at things, sometimes literally. That seems to be the case with DarbeeVision’s Darbee Visual Presence technology. Video processing hasn’t seen many changes except to facilitate moves towards 3D and 4K technologies. In essence, video processors have been keeping up with the times, but nothing revolutionary has occurred in making images look any better. Several years ago there was a move to enhance video through the use of frame interpolation and 120fps (frames per second) frame rates. That was cool, but it also made your feature films look like soap operas. Most people who like film turn those features off as soon as they get their TVs home.

Darbee Visual Presence Technology is one of the first “new” advances in video processing we’ve seen in a long time that doesn’t appear to be gimmicky. For one, it uses the power of processor-based computational ability to analyze images in real-time and refine the results. That means that pixel resolution and color depth aren’t the only game in town when it comes to image quality.

At least not anymore.

The Way Things Are Currently Done

Every television, projector or video processor currently works to improve the digital image fed into it. The thing is, those improvements are typically along the lines of contrast improvement, color depth enhancement, sharpness and noise filtering. And that’s about it. What Darbee Visual Presence, and the genre of computational image processing in general, is offering is the ability to utilize neuro-biologic principles to change the way monoscopic (non-3D) images convey depth and quality.

For years, pixels and colors have been the gold reference standard, and that’s all there really was. Now, computational image enhancement and processing is going to reveal a whole new way to process images. The analysis involved is far more resource-intensive, but it also yields far better results.

Computational Image Enhancement

If you apply models of how the eye sees, monoscopic images can be adjusted and manipulated to deliver much more depth and realism than previously thought. This is a wholly new area of image enhancement and, for once, it doesn’t appear to be smoke and mirrors. DarbeeVision, first and foremost, processes based on principles that take stereoscopic vision into account—but it applies the processing to a monoscopic image.

Here’s the point of contention that has everybody excited: DarbeeVision claims that all 2D televisions can be upgraded to feature what it is calling “Ultra HD” realism…without changing the display.

They are claiming a 4K viewing experience without the 4K.

DarbeeVision in a Nutshell

What DarbeeVision is attempting to do is create an image, on the fly, that looks more like what our brain would expect to see if we were looking at a true image. It is more or less displaying images in a way that is more like what our brain wants—or what it interprets as realistic.

But how?

For those technogeeks out there, DarbeeVision enhances monoscopic detail and depth cues by using parallax disparity as the basis for luminance modulation.

Say what?

It uses the principles of how we view things in three dimensions, using two eyes, to alter the way the luminance information in a video signal is displayed. The luminance channel of the video contains the resolution of an image and thus the detail. Artifacts (or errant pixels or problems) are avoided by the use of a sort of saliency mask, or contrast map of the image. Darbee Visual Presence actually uses real-time analysis to embed what the brain would consider to be more of a stereo image into the monoscopic picture. It’s the first real-time video processing system that’s based on a human-vision-based model.
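To make the idea of working on luminance alone a bit more concrete, here is a minimal Python sketch. It is not DarbeeVision's code. The Rec. 709 luma weights and the helper names `luma` and `apply_luma` are assumptions chosen for illustration, since the article does not say how the processor separates luminance from color.

```python
# Rec. 709 luma weights. Assumption: the article does not state which
# weights (if any) DarbeeVision actually uses.
LUMA_WEIGHTS = (0.2126, 0.7152, 0.0722)


def luma(rgb):
    """Return the luminance of an (r, g, b) pixel with channels in 0..1."""
    r, g, b = rgb
    wr, wg, wb = LUMA_WEIGHTS
    return wr * r + wg * g + wb * b


def apply_luma(rgb, new_luma):
    """Move a pixel to a new luminance by shifting all channels equally.

    The differences between channels (the color information) are kept,
    except where a channel has to be clamped to the 0..1 range.
    """
    delta = new_luma - luma(rgb)
    return tuple(min(1.0, max(0.0, c + delta)) for c in rgb)


pixel = (0.2, 0.4, 0.6)
brighter = apply_luma(pixel, 0.5)  # same color differences, higher luminance
```

Because the three weights sum to 1, adding the same offset to every channel changes the luminance by exactly that offset, which is why a processor can modulate brightness detail without touching the color differences.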

Darbee Visual Presence processing makes 2D images take on more depth and realism, and it does it in real time at greater-than-HD processing resolution. Unlike the frame interpolation of 120Hz processing, which has to compare multiple frames, DarbeeVision image processing is done “intra-frame”. That means you don’t end up lagging the video and you don’t need a huge buffer to get the job done. It’s fast and it’s efficient.

Video processing with Darbee Visual Presence is also resolution independent, which means it will work with a 720p, 1080p or 4K display.

How Does the Image Processing Work?

If it’s difficult to bring new technology to the masses for consumption, it’s even more difficult to explain what’s going on under the hood. But we’ll try. Darbee Visual Presence uses algorithms that compare frames with one another and then apply a defocus-and-subtract process to come up with a map of which features of the image have changed. Next, the system applies the change, but only to the areas it deems appropriate. Technologically speaking, DarbeeVision does this using a saliency map (a sort of grayscale contrast map), which the company dubs “Perceptor”. I’m waiting for them to sponsor a robot in the next Transformers movie.
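For readers who think better in code, the defocus-and-subtract step with a saliency gate can be sketched roughly as follows. This is an illustration only, not DarbeeVision's patented algorithm. The 3x3 box blur, the hard threshold used as a stand-in for the saliency map, and the names `box_blur`, `enhance`, `strength` and `threshold` are all assumptions.

```python
def box_blur(img):
    """Defocus: replace each pixel with the mean of its 3x3 neighbourhood.

    `img` is a list of rows of luminance values in 0..1. Pixels beyond
    the border are treated as copies of the nearest edge pixel.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(h - 1, max(0, y + dy))
                    xx = min(w - 1, max(0, x + dx))
                    total += img[yy][xx]
            out[y][x] = total / 9.0
    return out


def enhance(img, strength=0.5, threshold=0.02):
    """Add back the (original - defocused) difference where it is salient."""
    blurred = box_blur(img)
    out = []
    for row, blurred_row in zip(img, blurred):
        new_row = []
        for p, b in zip(row, blurred_row):
            diff = p - b  # the defocus-and-subtract map
            # Crude saliency gate (assumption): ignore tiny differences,
            # which are more likely to be noise than real detail.
            mask = 1.0 if abs(diff) > threshold else 0.0
            new_row.append(min(1.0, max(0.0, p + strength * mask * diff)))
        out.append(new_row)
    return out


# A small luminance patch with a vertical edge between columns 1 and 2.
edge = [[0.2, 0.2, 0.8, 0.8] for _ in range(4)]
result = enhance(edge)
```

In this toy version the pixels on either side of the edge are pushed further apart while flat areas are left alone, which is the general behaviour the saliency map is described as enforcing.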

All of this transformation is occurring in real-time and on a pixel-by-pixel basis. Because the results of the processing are simply written into the output pixels, the video processing can take place in a television, Blu-ray player, AV receiver or even an external processor. A local cable or satellite provider could use this to make their images stand out more than the competition. Heck, Blu-ray mastering may change and movie content may start to be issued with DarbeeVision preprocessing.

How Did Darbee Visual Presence Processing Come About?

Like many good inventions, this one was stumbled upon, but not by accident, and not quickly. Back in the 1970s, Paul Darbee was experimenting with getting 2D images to look more like stereoscopic images and was playing around with two cameras separated by approximately the distance between the average human eyes. These tests were all analogue at the time, of course, but his goal was to combine two images into one and better simulate stereoscopic viewing.

At some point, Paul ended up defocusing one of the cameras, inverting the resulting image and feeding it to the other through an analogue video synthesizer. The resulting picture, while not perfect, showed enhanced depth characteristics and greater separation. Details were easier to see as well.

Photoshop experts will recognize the inherent principles of the technology as they have been using the “unsharp mask” filter for some time. Ramp that up, convert it to real-time and make it capable of supporting and analyzing HD content and you’ve got a hint (though an incomplete one) of the basic DarbeeVision solution. Perhaps that’s a tad oversimplifying things, but you get the idea. The real trick was dealing with noise and unnecessarily sharp edge detail.
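The classic unsharp mask mentioned above is easy to sketch on a single row of pixel values. This is the textbook filter, not the DarbeeVision process, and the 3-tap blur and the names `blur_1d`, `unsharp_mask` and `amount` are assumptions made for the example.

```python
def blur_1d(samples):
    """3-tap average with clamped ends, a stand-in for 'defocusing'."""
    n = len(samples)
    return [
        (samples[max(0, i - 1)] + samples[i] + samples[min(n - 1, i + 1)]) / 3.0
        for i in range(n)
    ]


def unsharp_mask(samples, amount=1.0):
    """Sharpen by adding back the difference between original and blur.

    Subtracting the blurred copy is the digital cousin of inverting the
    defocused camera feed and mixing it with the sharp one.
    """
    blurred = blur_1d(samples)
    return [s + amount * (s - b) for s, b in zip(samples, blurred)]


row = [10, 10, 10, 20, 20, 20]  # a soft step from dark to light
sharpened = unsharp_mask(row)
```

The values next to the step overshoot in both directions (darker on the dark side, brighter on the bright side), which is what makes edges pop. It is also why noise and over-sharpened edges become a problem if the effect is applied everywhere.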

Darbee Visual Presence Technology Now

Paul Darbee’s 1970s experiment required a source for 3D content. Until recently that, and the processing requirements for the technology, made it difficult to bring to the mainstream. There was also the issue of making sure all artifacts could be squashed and dealt with. DarbeeVision received patents for the new process in 2006 and 2011. The patents cover the areas of embedding disparity depth cues into 2D images, a computationally inexpensive parallax disparity generator, and a very fast, very accurate saliency mask.

DarbeeVision Technology is now starting to show up in devices all across the consumer electronics industry. It is most definitely the “next best thing”. Look for it, in particular, in the upcoming Oppo BDP-103D Blu-ray player which debuted at the 2013 CEDIA Expo.
